Software is constantly changing and evolving, which means the infrastructure that supports it has to evolve too, to cope with changing requirements. This article describes what a modern application infrastructure is and which elements it should include. Once that’s clear, we will go through best practices in modern application development and the mistakes to avoid, and try to define some rules that can help you choose the infrastructure that fits your application best.

What is a modern application infrastructure?

When talking about modern applications, we may think about new technologies, frameworks, and languages (React, Golang, Node.js, Scala…), but the reality is that what makes an application modern is its architecture: event-oriented architectures with modular patterns, asynchronous communication, messaging between components, and streaming when needed. These are the elements that tell us whether an application is modern or not. We can always develop a monolithic application using Node.js, and that won’t make it a modern application.

Once we know what our modern application looks like, we need to describe what we expect from the infrastructure. First of all, modern application infrastructure needs to provide 24/7 availability worldwide. It also needs to be adaptive to cope with changes in load, resilient to react to failures, and cost-effective. Another key aspect of modern infrastructure is that it can be included in the same agile processes that the application development itself follows.

When going through all these requirements, the first thing that comes to mind is the cloud. The reason is that we can create infrastructure there very quickly and leverage key principles like automation, Infrastructure as Code (IaC), and DevSecOps without investing in our own infrastructure across the world.

Application Infrastructure Overview

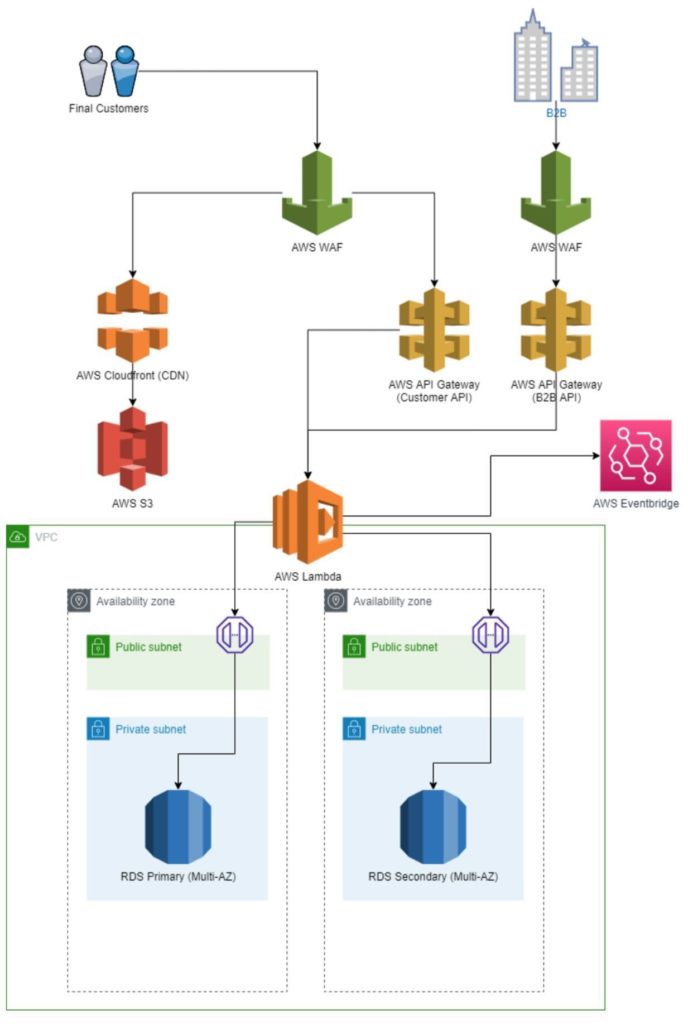

Once we have defined our application infrastructure requirements, a modern software infrastructure will, in most cases, include several of the following elements:

- Worldwide distributed frontend (our JavaScript deployed to a CDN)

- Containerized or serverless microservices (Kubernetes, AWS Lambda/Azure Functions)

- Relational and non-relational databases (MySQL, PostgreSQL, MongoDB, CouchDB)

- Messaging and streaming between components (Kafka, RabbitMQ; Spark for stream processing)

- API Gateway (Kong, or cloud providers’ products such as Amazon API Gateway and Azure API Management)

- Identity management with identity providers (Azure AD, Amazon Cognito, Keycloak)

This is just a list of components you have surely heard of, so let’s try to outline a process for creating the actual application infrastructure design.

Application Infrastructure Design Process

When designing an application infrastructure, we will need to answer these questions:

- What type of application do we have?
  - An e-commerce site? Many concurrent users at peak times, with usage valleys in between.
  - Data-intensive? Long-running processes that manipulate or categorize data.
  - A critical system? Healthcare, emergency services, banking.
- What layers does the application have?
  - Presentation (GUI)
  - Application (business logic)
  - Data (databases, storage…)
- Is it worldwide?
  - Is the customer base spread across the world?
- Does it provide a public API to integrate with other systems?
- What security levels does it need?
  - Is it subject to PCI DSS compliance?
  - ISO 27001 certification?
- Can we afford a failure in the system?
  - High availability
  - Disaster recovery plans

Once we have answered these questions, we can define our application infrastructure model based on our requirements. In general, our application infrastructure model will have the following pieces:

Infrastructure Definition

We need to describe our infrastructure in a place we can refer to when making future changes. This has typically been done in documents and diagrams. With Infrastructure as Code, we can simplify that documentation and also track changes and evolution over time.

So, in the same way we have a code repository, we will have an infrastructure code repository (typically a git repo). There we describe the resources we will create, using tools and languages like Terraform, CloudFormation, Ansible, Azure Blueprints…
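The key idea behind these tools is declarative. You describe the desired state, and the tool computes the changes needed to get there from what currently exists. Terraform calls this step a plan. The Python sketch below illustrates the idea with plain dictionaries. The resource names and attributes are hypothetical, and real tools also track state files, dependencies between resources, and provider APIs.

```python
from typing import Dict, List, Tuple

# A resource is identified by its name and described by a dict of attributes.
Resources = Dict[str, Dict[str, object]]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Return the (action, resource_name) pairs needed to turn `current` into `desired`."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical desired state, as it might be committed to the infrastructure repo...
desired_state: Resources = {
    "web-cdn": {"type": "cdn", "origin": "frontend-bucket"},
    "api-service": {"type": "container", "replicas": 3},
    "orders-db": {"type": "postgresql", "size": "medium"},
}
# ...and what is actually running today.
current_state: Resources = {
    "api-service": {"type": "container", "replicas": 2},
    "orders-db": {"type": "postgresql", "size": "medium"},
    "legacy-vm": {"type": "vm", "size": "large"},
}

for action, name in plan(desired_state, current_state):
    print(f"{action:>6} {name}")
# → update api-service
# → create web-cdn
# → delete legacy-vm
```

The desired state lives in git, so every change goes through review. Re-running the plan against an environment that already matches produces no actions, and that is what makes the process repeatable.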

App Infrastructure Delivery Process

Just as important as how we define our infrastructure is the process we use to deliver it. This process needs to be aligned with the Software Development Life Cycle, since application changes may require infrastructure changes. It is typically implemented as infrastructure pipelines in tools like Azure DevOps, AWS CodeDeploy, GitHub Actions, Jenkins, Bitbucket Pipelines, etc. In the end, the aim is to create infrastructure in an automated and repeatable manner, with no “manual” changes involved. That way, we can ensure nobody “forgets” anything when operating the infrastructure.

Deployment strategies

Linked to the infrastructure delivery process are the deployment strategies used to deliver the software. The application infrastructure needs to support strategies like blue-green deployments, canary releases, or primary-secondary deployment schemes. The right choice depends heavily on the application’s requirements. The most critical part is having an automated process to deploy, and to roll back in case of problems.
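A canary release can be reduced to a simple control loop. You send a growing share of traffic to the new version and roll back automatically if a health metric crosses a threshold. The Python sketch below makes some assumptions. The `error_rate_at` callback is hypothetical and stands in for a query to your monitoring system. The traffic steps and the 1% error-rate threshold are illustrative values only. In practice, a load balancer, service mesh, or progressive-delivery tool would apply the traffic weights.

```python
from typing import Callable, List


def run_canary(
    traffic_steps: List[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> str:
    """Gradually shift traffic to a new version, rolling back if errors get too high.

    traffic_steps: percentages of traffic to send to the new version, in order.
    error_rate_at: hypothetical hook returning the new version's observed error
        rate at a given traffic percentage (a monitoring query in a real system).
    """
    for percent in traffic_steps:
        # A real system would update load balancer / service mesh weights here.
        observed = error_rate_at(percent)
        if observed > max_error_rate:
            # Automated rollback: send all traffic back to the previous version.
            return f"rolled back at {percent}% (error rate {observed:.2%})"
    return "promoted to 100%"


steps = [5, 25, 50, 100]
print(run_canary(steps, lambda pct: 0.002))
# → promoted to 100%
print(run_canary(steps, lambda pct: 0.05 if pct >= 25 else 0.0))
# → rolled back at 25% (error rate 5.00%)
```

A blue-green deployment is the degenerate case with a single step at 100%. The "rollback" then simply points traffic back at the previous environment, which is still running.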

Scalability

Finally, it’s critical to design the infrastructure so that it can scale both vertically and horizontally. This depends heavily on the application architecture itself, which is why it’s key to have a modular architecture where modules can be scaled independently. Some modules scale vertically (e.g. a read database that needs to serve more and more analytics queries). Others scale horizontally (e.g. a set of microservices that need more replicas to handle the load in different regions).
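For horizontal scaling, orchestrators typically compute the number of replicas from an observed metric and a target value. The sketch below reproduces the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric). It adds a tolerance band and min/max bounds. The default values are illustrative assumptions, and the real controller adds further safeguards such as stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count using the proportional rule of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    # Don't scale when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))   # → 6 (scale out)
print(desired_replicas(4, current_metric=20, target_metric=60))   # → 2 (scale in)
print(desired_replicas(4, current_metric=63, target_metric=60))   # → 4 (within tolerance)
print(desired_replicas(8, current_metric=200, target_metric=50))  # → 10 (capped)
```

The rule only works well if each module can be scaled on its own metric, which is another argument for the modular architecture described above.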

Best Practices for Creating Modern Infrastructure

Once we have a clear understanding of the application requirements and what design we would like to have, there are some best practices to follow when creating modern application infrastructures:

– Modularize components:

Try not to run independent pieces of software on the same piece of infrastructure. For example, running your backend services together with the database on the same virtual machine can be fine for a proof of concept. You will soon realize, though, that you are creating single points of failure, and that updating a single element takes down the entire system.

It is a good idea to run application-layer services on separate elements and isolate them by functionality.

– Add caching to improve performance:

Add CDN distributions to cache static resources.

Use caching technologies like Redis to answer frequently repeated queries quickly while using few computing resources.
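A common way to put a cache such as Redis in front of a database is the cache-aside pattern. You read from the cache first. On a miss, you load from the database and store the result with an expiry. To keep the sketch runnable without a server, a small in-memory `TTLCache` class stands in for Redis. The `get_product` function, the key format, and the 60-second TTL are hypothetical. With the redis-py client, the same pattern would use `get` and `set(key, value, ex=ttl)`, plus serialization of the values.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """In-memory stand-in for a cache such as Redis: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict it and report a miss
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def get_product(product_id: str, cache: TTLCache, load_from_db: Callable[[str], Any]) -> Any:
    """Cache-aside read: try the cache, fall back to the database, then populate the cache."""
    key = f"product:{product_id}"
    value = cache.get(key)
    if value is None:
        value = load_from_db(product_id)
        cache.set(key, value)
    return value


db_calls = []


def fake_db(product_id: str) -> Dict[str, str]:
    db_calls.append(product_id)  # count how often the "database" is hit
    return {"id": product_id, "name": "Example product"}


cache = TTLCache(ttl_seconds=60)
get_product("42", cache, fake_db)
get_product("42", cache, fake_db)
print(len(db_calls))  # → 1: the second read was served from the cache
```

The TTL is the main design knob. A longer expiry saves more database load, but it serves stale data for longer after an update.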

– Adapt the technology to your needs:

Cloud providers offer lots of different services that can help you with your application. If you are starting from scratch, consider using serverless (AWS Lambda, Azure Functions, Google Cloud Functions…) to deliver value quickly.
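To show how little code a serverless function needs, here is a minimal AWS Lambda handler in Python. It assumes the function is triggered over HTTP through Amazon API Gateway with a proxy integration. That integration passes query parameters in `event["queryStringParameters"]` and expects a dict with `statusCode`, `headers`, and a string `body`. The greeting logic and the `name` parameter are purely illustrative. Packaging, permissions, and the trigger itself would still be defined in your IaC repository.

```python
import json


def lambda_handler(event, context):
    """Entry point that AWS Lambda invokes for each request.

    Assumes an API Gateway proxy integration: queryStringParameters is a dict,
    or None/absent when the request has no query string.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local usage: call the handler directly with a hand-built event (context is unused here).
response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
print(response["body"])  # → {"message": "Hello, Ada!"}
```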

In most cases, your load will not be big or worldwide in the early stages. It will be more important to deliver features quickly and change easily. Choose technologies that help you with that.

– The simpler the better:

Don’t overcomplicate systems by adding lots of pieces just in case you might use them in the future. It’s better to rewrite parts of the system and change the infrastructure in the mid-term than to overcomplicate the solution at the very beginning, just in case you are super successful.

This doesn’t mean that if your software needs messaging systems, streaming, or big data analytics, you should not put them in place. It means that unless you are sure you need these features, you shouldn’t add them until they become a necessity.

– Automate as much as you can:

Infrastructure as Code and delivery pipelines are key to replicating and changing your app infrastructure. They are the only way to evolve the infrastructure easily, without lots of work and manual interventions. Those interventions normally lead to long maintenance windows, breaking changes, and more.

– Monitor from the very beginning:

Cloud infrastructure comes with its own challenge: monitoring and observability are key. It’s important to instrument all the elements of your application and send all logs and metrics to a centralized system. That will help you create alerts and prevent problems.
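Centralized logging works best when every service emits structured records rather than free text, so the log platform can index fields and alert on them. Below is a minimal sketch using Python’s standard `logging` module. It writes one JSON object per line to stdout, where container platforms and cloud agents typically collect it. The service name, the `fields` convention, and the field names are hypothetical.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}}).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("order created", extra={"fields": {"order_id": "A-1001", "amount_eur": 59.90}})
```

Because `order_id` becomes its own field, the central system can search by it or alert on it without parsing message text.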

Tracing becomes critical, since it’s the best way to follow requests and messages as they move through the system. Open-source frameworks like OpenTelemetry help you in this process. They will give you lots of information about what’s going on inside your infrastructure and applications.
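Distributed tracing relies on passing a trace context along with every request or message. By default, OpenTelemetry propagates it using the W3C Trace Context `traceparent` header, whose format is version-traceid-parentid-flags. The stdlib-only sketch below builds and parses that header to show the mechanics. It handles only version `00`, and in practice you would let the OpenTelemetry SDK do this for you.

```python
import re
import secrets
from typing import Dict, Optional

# version "00", 32-hex trace-id, 16-hex parent-id, 2-hex trace-flags (lowercase hex)
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(trace_id: Optional[str] = None, sampled: bool = True) -> str:
    """Build a traceparent header, starting a new trace if no trace_id is given."""
    trace_id = trace_id or secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)                # 8 random bytes -> 16 hex chars
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header: str) -> Optional[Dict[str, str]]:
    """Extract the ids from an incoming traceparent header, or None if it is invalid."""
    match = TRACEPARENT_RE.match(header.strip())
    if match is None:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero ids are not valid
    return {"trace_id": trace_id, "parent_id": parent_id, "flags": flags}


# Service A starts a trace and sends the header with its call to service B...
outgoing = new_traceparent()
# ...service B parses it and continues the same trace with a span id of its own.
incoming = parse_traceparent(outgoing)
forwarded = new_traceparent(trace_id=incoming["trace_id"])
print(incoming["trace_id"] == parse_traceparent(forwarded)["trace_id"])  # → True
```

The same header can travel in message metadata (for example, Kafka record headers), which is what lets a trace follow a request across asynchronous hops too.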

Take advantage of services like Amazon CloudWatch, Azure Application Insights and Log Analytics, Google Cloud Monitoring, etc. They integrate easily with your infrastructure and give you lots of metrics out of the box.

– Deploy across availability zones:

Deploy across availability zones so you can handle disasters in cloud providers’ data centers. Don’t assume that a cloud provider’s data centers never fail. Even if it does not happen very often, a failure can affect your business massively. Cloud providers normally don’t guarantee SLAs higher than 99.99% for services running in a single availability zone, and that may not be enough for you and your business.
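A quick calculation shows why a single zone may not be enough. The sketch below converts an availability figure into yearly downtime and estimates the availability of a service running in several zones. It assumes zone failures are independent, which real outages do not always respect, so treat the multi-zone result as an optimistic upper bound rather than a guarantee. The 99.9% per-zone figure in the example is illustrative, not a provider SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def downtime_minutes_per_year(availability: float) -> float:
    """Expected yearly downtime for a given availability (e.g. 0.9999 for 99.99%)."""
    return (1 - availability) * MINUTES_PER_YEAR


def redundant_availability(per_zone: float, zones: int) -> float:
    """Availability of a service that stays up while at least one zone is up.

    Assumes independent zone failures, which is optimistic: shared dependencies
    (control plane, DNS, a bad deployment) can take several zones down at once.
    """
    return 1 - (1 - per_zone) ** zones


print(round(downtime_minutes_per_year(0.9999), 1))  # → 52.6
print(round(redundant_availability(0.999, 2), 6))   # → 0.999999
```

Even 99.99% still allows about 52.6 minutes of downtime per year. Whether that is acceptable is a business decision, not a technical one.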

– Use container orchestration technologies:

If you have a backend service layer with lots of microservices and different loads in all of them, container orchestration technologies can help a lot. They will enable you to adapt quickly and accurately to your performance needs.

– Use asynchronous messaging systems for communication between your services:

One of the biggest challenges you will face in modern application infrastructure is understanding the communication patterns across services, and knowing why some services are struggling to handle their requests. Synchronous calls, unless the nature of the request makes them mandatory, tend to hide which services are having problems. A slow service simply shows up as extra latency in every service that calls it.

Messaging systems also show you which queues/topics receive more requests and how long listeners take to respond, which helps in tracking down system issues.
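The visibility argument can be made concrete. Once services talk through a queue, backlog depth and time-in-queue become measurable per queue. The sketch below wraps Python’s standard `queue.Queue` to record both. The `InstrumentedQueue` class and the order messages are hypothetical. Brokers such as Kafka or RabbitMQ expose equivalent metrics (consumer lag, queue length), and in practice you would collect those into your monitoring instead.

```python
import queue
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    body: str
    enqueued_at: float


class InstrumentedQueue:
    """A queue that exposes backlog size and how long messages wait before processing."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._q = queue.Queue()
        self._clock = clock
        self.wait_times: List[float] = []

    def publish(self, body: str) -> None:
        # The producer returns immediately; it never waits for the consumer.
        self._q.put(Message(body, self._clock()))

    def depth(self) -> int:
        # A steadily growing depth means consumers cannot keep up.
        return self._q.qsize()

    def consume(self, handle: Callable[[str], None]) -> None:
        msg = self._q.get()
        # Time the message spent in the queue before a consumer picked it up.
        self.wait_times.append(self._clock() - msg.enqueued_at)
        handle(msg.body)
        self._q.task_done()


now = [0.0]
orders = InstrumentedQueue(clock=lambda: now[0])
for i in range(3):
    orders.publish(f"order-{i}")
print(orders.depth())  # → 3
now[0] = 2.5  # the consumer only gets to the backlog 2.5 seconds later
processed: List[str] = []
orders.consume(processed.append)
print(orders.depth(), orders.wait_times)  # → 2 [2.5]
```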

– Build in security from the beginning:

Adding security layers while the system is already running is difficult, and may break your system.

If you have a web application or REST APIs, a Web Application Firewall is mandatory. It will protect you against the most frequent, low-effort attacks. API gateways come with features like rate limiting. In public APIs, it is essential to limit the number of requests you receive from a single source, so that a single client cannot exhaust your resources.
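Gateway rate limiting is often based on a token-bucket algorithm. Amazon API Gateway, for example, documents its throttling this way. Each client gets a bucket that refills at a steady rate and allows short bursts. The sketch below keeps one bucket per client IP in memory. The limits (a burst of 5 requests, refilled at 1 request per second) are illustrative assumptions. A production gateway would share its counters across all gateway nodes instead of keeping them in a single process.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated_at = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated_at) * self.rate)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would answer HTTP 429 Too Many Requests here


buckets: Dict[str, TokenBucket] = {}


def is_allowed(client_ip: str, clock: Callable[[], float] = time.monotonic) -> bool:
    """One bucket per client, so a single noisy client cannot exhaust shared capacity."""
    if client_ip not in buckets:
        buckets[client_ip] = TokenBucket(capacity=5, rate=1.0, clock=clock)
    return buckets[client_ip].allow()


now = [0.0]


def fake_clock() -> float:
    return now[0]


results = [is_allowed("203.0.113.7", fake_clock) for _ in range(7)]
print(results)  # → [True, True, True, True, True, False, False]
now[0] = 2.0    # two seconds later, two tokens have been refilled
print(is_allowed("203.0.113.7", fake_clock))  # → True
```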

Ensure you have bot protection enabled in your WAFs and make use of CDNs. This will help you control bots and mitigate DDoS attacks. Keep in mind that cloud providers’ DDoS products work more like insurance than like actual extra DDoS protection. Providers are already the party most interested in keeping their infrastructure free from that type of attack. Additionally, you should:

- Follow the principle of least privilege.

- Isolate networking layers (e.g. Web servers should only have access to the application servers and never directly to the data servers).

- Limit outbound connections and monitor unusual activity inside your networks.

- Use private endpoints (e.g. AWS VPC endpoints, Azure Private Endpoints) to connect to cloud services without going over the public internet, and enable encryption at rest for your data.

- Enable security audits. If you are using containers, vulnerability assessment tools like Qualys, Sysdig, Aqua Security, etc. can help.
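The network-isolation rule above is essentially a default-deny allow-list between tiers, similar to what cloud security groups express. The Python sketch below models that rule set using hypothetical tier names and ports. It adds a check that could run as a policy test in a pipeline, catching a proposed change that would let the web tier reach the database directly.

```python
from typing import Iterable, List, Set, Tuple

Flow = Tuple[str, str, int]  # (source tier, destination tier, port)

# Hypothetical tiers and ports; 5432 is PostgreSQL's default port.
ALLOWED_FLOWS: Set[Flow] = {
    ("internet", "web", 443),
    ("web", "app", 8080),
    ("app", "data", 5432),
}

# Tier pairs that must never talk directly, whatever the port.
FORBIDDEN_PAIRS: Set[Tuple[str, str]] = {
    ("internet", "app"),
    ("internet", "data"),
    ("web", "data"),
}


def is_flow_allowed(source: str, destination: str, port: int) -> bool:
    """Default deny: a connection is permitted only if it is explicitly listed."""
    return (source, destination, port) in ALLOWED_FLOWS


def violations(rules: Iterable[Flow]) -> List[Flow]:
    """Return the rules in a proposed rule set that break tier isolation."""
    return sorted(rule for rule in rules if (rule[0], rule[1]) in FORBIDDEN_PAIRS)


print(is_flow_allowed("web", "app", 8080))   # → True
print(is_flow_allowed("web", "data", 5432))  # → False
proposed = ALLOWED_FLOWS | {("web", "data", 5432)}
print(violations(proposed))  # → [('web', 'data', 5432)]
```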

– Finally, match your technology choices to your team’s skills:

Suppose your engineers are good at using AWS EC2 instances and auto-scaling, but you would love to design a fully serverless system with AWS Lambda because you believe it’s ideal for your needs. In that case, it’s better to bring in an expert and train your team than to learn on the job. Big mistakes can be made that way, and your infrastructure will struggle to perform.

Web Application Infrastructure Example

A basic example of a Web Application Infrastructure in AWS could be the following:

If you have any questions regarding the article, or require additional information on the topic, please feel free to reach out!

Author: