AI Cheatsheet #3

In the first edition of the AI Cheatsheet, we looked at how LLMs work and gave an overview of their crucial components:

- Context: a prompt with relevant information (prompt input = perception).

- Reasoning: the model and its configuration (trained knowledge + prompt memory = decision making).

- Conclusion: action, such as giving answers or using tools (non-deterministic = same input, different conclusion).

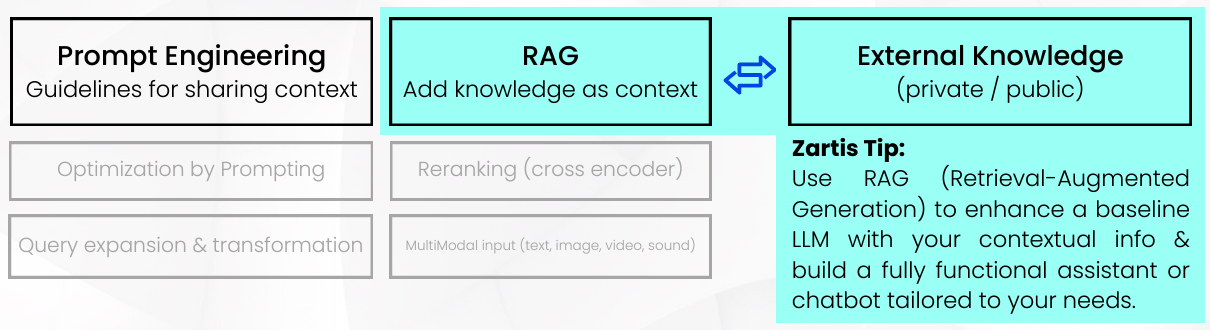
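The "non-deterministic" point comes from how most LLMs choose each next word: they sample from a probability distribution instead of always taking the top-scoring option. The minimal Python sketch below illustrates temperature-scaled softmax sampling. The candidate words and scores are made up for illustration; real models apply the same idea over their full vocabulary.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(tokens, logits, temperature, rng):
    """Pick one token at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate next words and model scores for one fixed prompt.
tokens = ["reliable", "fast", "cheap"]
logits = [2.0, 1.5, 0.5]

rng = random.Random()
# Same input, run five times: the chosen word can differ between runs.
print([sample_next_token(tokens, logits, 1.0, rng) for _ in range(5)])
# At a very low temperature, almost all probability mass sits on the top-scoring word.
print(softmax_with_temperature(logits, 0.05))
```

This is why lowering the temperature setting makes answers more repeatable, while higher values make them more varied.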

In the second edition, we took a deep dive into Context and how prompt engineering can improve it. However, prompt engineering is not the only way to improve Context. One of the most powerful methods for improving the accuracy of the output is Retrieval-Augmented Generation (RAG):

RAG - Retrieval-Augmented Generation:

Retrieval-Augmented Generation (RAG) improves a language model’s output by retrieving relevant information from external knowledge bases and adding it to the prompt before the model generates a response. It extends Large Language Models’ capabilities to specific domains without retraining, enhancing relevance and accuracy.
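To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a small in-memory list of documents (`KNOWLEDGE_BASE`, hypothetical) and uses simple word-overlap scoring as a stand-in for the embedding-based semantic search most production RAG systems use. The final call to an LLM is left out, since it depends on your provider.

```python
import re

# Hypothetical in-memory knowledge base standing in for an external source.
KNOWLEDGE_BASE = [
    "Our support desk is open Monday to Friday, 9:00 to 17:00 CET.",
    "Refunds are processed within 14 days of receiving the returned item.",
    "Premium customers can reach support by phone as well as email.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (a simple stand-in for semantic search)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query, passages):
    """Augment the user's question with the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)  # this augmented prompt would then be sent to the LLM of your choice
```

Because the knowledge lives in the list rather than in the model’s weights, editing an entry changes future answers without any retraining.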

The benefits of Retrieval-Augmented Generation

LLMs power AI chatbots but may present false or outdated information, impacting user trust. RAG addresses these challenges by retrieving relevant data from authoritative sources.

RAG benefits include cost-effective implementation, access to current information, enhanced user trust, and more developer control over chatbot responses.

Access to current information

Keep chatbot responses based on the latest data and insights by updating the knowledge base, with no need to retrain the model.

Enhanced user trust and experience

Build trust with users by providing accurate and up-to-date information that serves their needs.

Cost effective implementation

Reduce development and maintenance costs compared to traditional chatbot solutions, since new knowledge is added by updating data rather than by retraining a model.

More developer control over responses

Control which sources the chatbot draws on, and shape its responses to align with your brand voice and messaging.

Different use cases with tech & tools suggestions

Here are some use cases that are already being leveraged by many companies:

| Use case | Description | Tooling * |
|---|---|---|
| Customer support automation | RAG can power sophisticated chatbots and virtual assistants that provide detailed, contextually relevant answers to customer queries. | Zendesk AI Enabled, Copilot Studio, Google Dialogflow, Rasa |
| Legal and compliance research | In fields requiring significant research and reference to existing laws and regulations, RAG can help automate the retrieval and synthesis of legal texts. | Casetext, LexisNexis, Westlaw |
| Education tools and e-learning | RAG can enhance educational platforms by providing detailed, accurate explanations and tutoring assistance. | |
| Medical information systems | RAG models can provide clinicians and researchers with instant access to medical literature and clinical data, aiding diagnosis, treatment planning, and research. | DataRobot, Druid |
| Product development and innovation | RAG can generate new ideas or improve existing products by retrieving and incorporating feedback from various sources, such as customer reviews, technical forums, and product databases. | |

* Every tool must be evaluated to determine its suitability for a particular use case within a specific company or domain. It is essential to consider the unique aspects of your company’s infrastructure when selecting tools. We encourage you to discuss your specific needs with us, allowing our team to offer guidance that is customized to your requirements.

Other well-known products making use of the RAG model:

Vector databases vs. existing data stores

Did you know you may not always need a vector database to improve your model’s responses?

RAG systems combine a language model’s understanding with a retrieval step that fetches relevant information from a data store before the model generates its response. This means that if you already have a robust database or structured data store, the retrieval step can query it directly to enhance AI output, without the need to build or maintain a separate vector database.

- Using existing databases can significantly reduce implementation complexity and costs.

- It allows organizations to capitalize on their current data assets and infrastructure, while also speeding up the deployment of AI capabilities.

- This approach also facilitates a more seamless integration of AI into existing workflows and systems, minimizing disruption and maximizing the utility of both new AI technologies and existing data resources.

Zartis Tip: Use vector extensions for the data stores you already use (for example, pgvector for PostgreSQL), or build your vectors yourself and store them alongside the existing data.
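One way to follow this tip is to add an embedding column to a table you already have. The sketch below does that in SQLite, storing each vector as JSON next to its row and computing cosine similarity in Python. `toy_embed` is a hypothetical placeholder (a hashed bag-of-words), not a real embedding model, and the `articles` table is made up; with a vector extension such as pgvector for PostgreSQL, the similarity search would run inside the database instead.

```python
import hashlib
import json
import math
import re
import sqlite3

DIMENSIONS = 256

def toy_embed(text):
    """Hypothetical stand-in for a real embedding model: a normalized, hashed bag-of-words vector."""
    vector = [0.0] * DIMENSIONS
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % DIMENSIONS
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]

def cosine(a, b):
    # Both vectors are already normalized, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))

# An "existing" table, extended with one extra column that holds the embedding as JSON.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO articles (title, body) VALUES (?, ?)",
    [
        ("Password reset", "How to reset your password from the login page."),
        ("Invoices", "Download past invoices from the billing section."),
    ],
)
conn.execute("ALTER TABLE articles ADD COLUMN embedding TEXT")
for row_id, body in conn.execute("SELECT id, body FROM articles").fetchall():
    conn.execute("UPDATE articles SET embedding = ? WHERE id = ?", (json.dumps(toy_embed(body)), row_id))

def search(query, top_k=1):
    """Return the titles of the rows whose stored vectors are most similar to the query."""
    query_vector = toy_embed(query)
    rows = conn.execute("SELECT title, embedding FROM articles").fetchall()
    ranked = sorted(rows, key=lambda row: cosine(query_vector, json.loads(row[1])), reverse=True)
    return [title for title, _ in ranked[:top_k]]

print(search("How do I reset my password?"))
```

The original columns stay untouched, so existing applications keep working while the same rows become searchable by similarity.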

Scenarios where you may not need a custom vector database:

In certain setups, as long as your data quality and consistency permit, you may not need embeddings or a vector database at all. You can retrieve the relevant records from your existing data with ordinary queries and pass them directly to the model to generate its response.
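When the data is already well structured, the retrieval step can be an ordinary SQL query. In this minimal sketch, the `faq` table, its columns, and the way the category is chosen (assumed to come from, say, a dropdown or a simple classifier) are all hypothetical; the point is that no embeddings are involved.

```python
import sqlite3

# Hypothetical existing FAQ table; in practice this would be your production database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE faq (question TEXT, answer TEXT, category TEXT)")
conn.executemany(
    "INSERT INTO faq VALUES (?, ?, ?)",
    [
        ("What are your shipping times?", "Orders ship within 2 business days.", "shipping"),
        ("Do you ship abroad?", "We ship to all EU countries.", "shipping"),
        ("How do I change my plan?", "Plans can be changed from the account page.", "billing"),
    ],
)

def fetch_context(category, limit=5):
    """Retrieve relevant rows with a plain, parameterized SQL query: no embeddings involved."""
    rows = conn.execute(
        "SELECT question, answer FROM faq WHERE category = ? LIMIT ?", (category, limit)
    ).fetchall()
    return "\n".join(f"Q: {q}\nA: {a}" for q, a in rows)

context = fetch_context("shipping")
prompt = f"Using these FAQ entries:\n{context}\n\nAnswer the customer: Can I get my order sent to Spain?"
print(prompt)
```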

Consider the search bar of an e-commerce site: product descriptions are enriched with metadata, and a model then searches this enriched data to answer shoppers’ queries. Any similar approach that leverages metadata can enhance the model’s knowledge without requiring a separate database.
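A minimal sketch of the e-commerce idea, under stated assumptions: the product records and metadata fields (`category`, `terrain`, `waterproof`) are hypothetical, and the filters are assumed to have already been extracted from the shopper’s query. Metadata is used both to pre-filter the catalogue and to enrich the text the model reads.

```python
# Hypothetical product records: short descriptions plus metadata the shop already stores.
products = [
    {"name": "Trail Runner X", "description": "Lightweight running shoe.",
     "metadata": {"category": "footwear", "terrain": "trail", "waterproof": True}},
    {"name": "City Sneaker", "description": "Casual everyday shoe.",
     "metadata": {"category": "footwear", "terrain": "road", "waterproof": False}},
    {"name": "Rain Shell", "description": "Packable jacket.",
     "metadata": {"category": "outerwear", "terrain": "any", "waterproof": True}},
]

def enrich(product):
    """Fold the structured metadata into the text the model will read."""
    facts = ", ".join(f"{key}: {value}" for key, value in product["metadata"].items())
    return f"{product['name']}: {product['description']} ({facts})"

def candidates(filters):
    """Keep only products whose metadata matches every filter, returned in enriched form."""
    return [
        enrich(p) for p in products
        if all(p["metadata"].get(key) == value for key, value in filters.items())
    ]

# Assume the filters below were extracted from a search like "waterproof shoes".
shortlist = candidates({"category": "footwear", "waterproof": True})
print(shortlist)
```

The shortlist, rather than the whole catalogue, is what would be placed in the model’s prompt.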

Similarly, a travel web application can enrich destination details with user feedback. For example, if kayaking is frequently mentioned with positive sentiment for a specific destination, the travel company can use the model to recommend that location to someone searching for kayaking opportunities and even provide reasoning for why it is a suitable destination for the sport.
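The travel example can be sketched the same way. Here each review is assumed to already carry a sentiment label (for instance from a sentiment model), and the reviews and destinations are hypothetical. Mentions of an activity are aggregated into a net score per destination, and the matching reviews are kept as evidence the model can use to explain its recommendation.

```python
from collections import Counter

# Hypothetical reviews with a sentiment label already attached.
reviews = [
    {"destination": "Lake Bled", "text": "Kayaking at sunrise was magical", "sentiment": "positive"},
    {"destination": "Lake Bled", "text": "Great kayaking and hiking", "sentiment": "positive"},
    {"destination": "Split", "text": "Kayaking tour was overcrowded", "sentiment": "negative"},
    {"destination": "Split", "text": "Sea kayaking around the islands was fun", "sentiment": "positive"},
]

def activity_scores(activity):
    """Net sentiment per destination, counting only reviews that mention the activity."""
    scores = Counter()
    for review in reviews:
        if activity in review["text"].lower():
            scores[review["destination"]] += 1 if review["sentiment"] == "positive" else -1
    return scores

def recommendation_context(activity, top_n=1):
    """Turn the aggregated feedback into evidence the model can cite in its reasoning."""
    lines = []
    for destination, score in activity_scores(activity).most_common(top_n):
        quotes = [r["text"] for r in reviews
                  if r["destination"] == destination and activity in r["text"].lower()]
        lines.append(f"{destination} (net positive mentions: {score}): " + "; ".join(quotes))
    return "\n".join(lines)

print(recommendation_context("kayaking"))
```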

This approach not only reduces technical complexity but also improves the model’s performance by utilizing well-maintained and rich datasets that the organization already possesses. Additionally, leveraging existing data infrastructure ensures that the data used is relevant and tailored to the specific needs and context of the organization, which can significantly enhance the effectiveness and accuracy of the AI’s outputs.

Zartis Tip: Thoroughly analyze the data you have, as certain parts or chunks can be contextualized, enabling a RAG-based search and analysis that effectively improves your product’s behavior.
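What "contextualized" chunks look like depends on your data. One common approach, sketched here as an assumption rather than a prescribed method, is to split long text into overlapping windows and prefix each chunk with the document and section it came from, so that a retrieved chunk still makes sense on its own. The function name and its parameters are hypothetical.

```python
def contextual_chunks(title, section, text, chunk_words=40, overlap=10):
    """Split text into overlapping word windows, prefixing each with its source."""
    if overlap >= chunk_words:
        raise ValueError("overlap must be smaller than chunk_words")
    words = text.split()
    if not words:
        return []
    step = chunk_words - overlap
    prefix = f"[{title} > {section}] "
    chunks = []
    # The range bound makes the last window reach the end of the text
    # without emitting a window that only repeats the previous overlap.
    for start in range(0, max(len(words) - overlap, 1), step):
        chunks.append(prefix + " ".join(words[start:start + chunk_words]))
    return chunks

policy = ("Refunds are issued to the original payment method. Processing takes up to "
          "14 days after we receive the item. Store credit is available immediately.")
for chunk in contextual_chunks("Returns policy", "Refund timelines", policy, chunk_words=12, overlap=3):
    print(chunk)
```

Each chunk would then be indexed (by keywords or embeddings) together with its prefix, so search results carry their context with them.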

When not to use RAG

Retrieval-Augmented Generation (RAG) offers a powerful approach to natural language processing (NLP) and excels at dynamic, knowledge-intensive tasks. However, there are scenarios where alternative solutions may be more suitable:

In tasks where the emphasis lies on creativity

For instance, consider an email that should be funny, surprising, or written in a unique voice. RAG excels at factual accuracy, but retrieving reference material does little to help the model generate fresh ideas that resonate with a large audience.

In cases where speed is key

For example, in a large broadcast, transmission time can matter down to the millisecond. Because RAG retrieves relevant information before generating a response, this two-step approach adds latency.
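The extra delay is additive: total response time is retrieval time plus generation time. This sketch uses `time.sleep` to simulate both steps (the durations, the function names, and the 100 ms budget are made up for illustration) and measures them with `time.perf_counter`.

```python
import time

# Hypothetical stand-ins: the sleeps simulate the cost of each step.
def retrieve(query):
    time.sleep(0.05)  # e.g. a database or vector search round trip
    return ["relevant passage"]

def generate(prompt):
    time.sleep(0.10)  # model inference
    return "answer"

def timed(fn, *args):
    """Run fn and return its result together with the elapsed time in seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

passages, retrieval_s = timed(retrieve, "query")
answer, generation_s = timed(generate, f"{passages} query")
total_ms = (retrieval_s + generation_s) * 1000
print(f"retrieval {retrieval_s * 1000:.0f} ms + generation {generation_s * 1000:.0f} ms = {total_ms:.0f} ms")

LATENCY_BUDGET_MS = 100  # hypothetical requirement
print("within budget" if total_ms <= LATENCY_BUDGET_MS else "over budget")
```

Measuring each step separately like this shows how much of the budget retrieval alone consumes before the model even starts generating.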

Where data is scarce

RAG relies on a rich dataset in which it can find relevant details on a given topic. When there is little relevant data to retrieve, or the message is simple and direct, a regular generative AI solution is often better suited.
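One way to handle sparse data is to use retrieval only when it actually finds something relevant and to fall back to plain generation otherwise. In this minimal sketch, the word-overlap score and the 0.5 threshold are hypothetical stand-ins for a real relevance measure.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap_score(query, doc):
    """Fraction of query words found in the document (a crude relevance score)."""
    q = tokenize(query)
    return len(q & tokenize(doc)) / len(q) if q else 0.0

def build_prompt(query, documents, min_score=0.5):
    """Use RAG only when retrieval finds something relevant; otherwise prompt the model directly."""
    relevant = [d for d in documents if overlap_score(query, d) >= min_score]
    if not relevant:
        return query, "plain"  # nothing worth retrieving: plain generation
    context = "\n".join(relevant)
    return f"Context:\n{context}\n\nTask: {query}", "rag"

docs = ["Office closed on 25 December", "Holiday rota for the support team"]
print(build_prompt("Write a short thank-you note to the team", docs)[1])
print(build_prompt("Is the office closed on 25 December", docs)[1])
```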

List of useful links and papers:

Discover some of the resources that feed our research as well as useful links to tools and papers that are fueling advancements in the AI world.

Paper:

Links:

- What is Retrieval-Augmented Generation? by AWS

- Zendesk AI Enabled, by Zendesk

- Google Dialogflow, by Google Cloud

- AI legal assistant, by Casetext

- AI-powered work tools for legal teams, by LexisNexis

- AI search engine for legal information, by Thomson Reuters

- AI-powered course creator for edtech, by Absorb

- Personalized customer care, by Elementx AI

- AI-powered data insights for healthcare institutions

- Healthcare virtual assistant, by Druid

- Facebook AI: streamlining the creation of intelligent NLP models with RAG

- Salesforce Einstein 1: utilizing customer data to create customizable, predictive, and generative AI experiences

- Hugging Face Transformers library, provided by its community


If you have questions or require additional context on any of the information shared, please feel free to let us know by using the contact form or sending an email to sayhello@zartis.com!